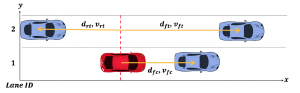

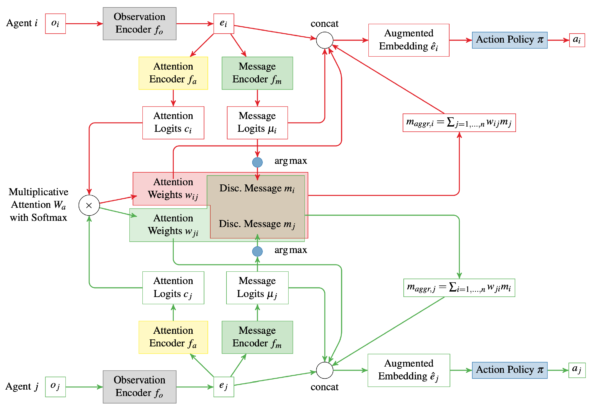

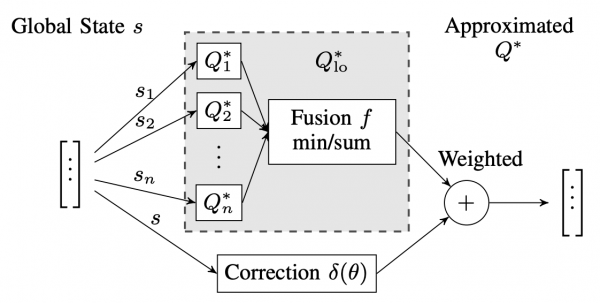

Traffic modeling is one of the top concerns in the field of autonomous driving. The traffic environment typically has many degrees of freedom and a high level of uncertainty, which makes modeling difficult. Meanwhile, the balance among safety, comfort, and efficiency strongly affects the control laws. In this project, we present a driver modeling process, together with evaluation results, for an intelligent autonomous driving policy obtained through reinforcement learning. Q-learning and deep Q-learning are applied to concise but descriptive state and action spaces under a Markov Decision Process (MDP) model, so that a policy can be developed with a limited computational load. The resulting driver performs reasonable maneuvers, including acceleration, deceleration, and lane changes, under usual traffic conditions on a two-lane highway. A traffic simulator is also constructed to evaluate a given policy in terms of collision rate, average travelling speed, and number of lane changes. Results show that the policy is well trained within a reasonable training time. The intelligent driver that follows the policy interacts with the stochastic traffic environment, showing a low collision rate and a higher travelling speed than the average speed of the environment vehicles.
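For readers unfamiliar with the technique named above, the following is a minimal sketch of a tabular Q-learning update with epsilon-greedy action selection. It is not the project's implementation: the action names, the string state labels, and the values of `alpha`, `gamma`, and `epsilon` are assumptions chosen only for illustration, and the deep Q-learning variant (which replaces the table with a neural network) is not shown.

```python
import random
from collections import defaultdict

# Hypothetical action set, loosely following the maneuvers named in the abstract.
ACTIONS = ("accelerate", "decelerate", "change_lane")


def make_q_table():
    """Return a Q-table mapping any hashable state to per-action values (all 0.0)."""
    return defaultdict(lambda: {a: 0.0 for a in ACTIONS})


def choose_action(q, state, epsilon, rng=random):
    """Epsilon-greedy selection: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    values = q[state]
    # Ties are broken in favour of the first action listed in ACTIONS.
    return max(ACTIONS, key=lambda a: values[a])


def q_update(q, state, action, reward, next_state,
             alpha=0.1, gamma=0.9, done=False):
    """Apply one Q-learning step:
    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a)).
    Returns the updated Q(s, a).
    """
    # A terminal transition (for example, a collision) has no future value.
    best_next = 0.0 if done else max(q[next_state].values())
    td_target = reward + gamma * best_next
    q[state][action] += alpha * (td_target - q[state][action])
    return q[state][action]
```

In a training loop, a simulator would supply `reward` and `next_state` after each chosen action, with `done=True` on terminal events. The reward design and the state encoding (for instance, a tuple of lane index and discretized gaps to nearby vehicles) are the parts that determine the trade-off among safety, comfort, and efficiency; both are left open here because they are not specified in this section.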